List and Describe I/O Optimization Algorithms Used in Linux

Broad classes of optimization algorithms, their underlying ideas, and their performance characteristics. Iterative algorithms for minimizing a function f.

Introduce Linux Io In Detail Develop Paper

Here are some examples.

A good algorithm is one that produces. 5.3 Mini-batch with Adam mode. Active-set methods solve the Karush-Kuhn-Tucker (KKT) equations and use a quasi-Newton method to approximate the Hessian matrix.

CPU scheduling is the process of determining which process will own the CPU for execution while another process is on hold. There is very little we can do in the VFS layer to tune I/O performance. Optimization Toolbox for nonlinear optimization solvers.

An optimization algorithm is a procedure that is executed iteratively, comparing various solutions until an optimum or a satisfactory solution is found. These would be described as O(N^2). This notebook explores introductory-level algorithms and tools that can be used for nonlinear optimization.

Algorithms are mainly used for mathematical and computer programs, whilst flowcharts can be used to describe all sorts of processes. A group of mathematical algorithms used in machine learning to find the best available alternative under the given constraints. In this article we discussed optimization algorithms like gradient descent and stochastic gradient descent and their application in logistic regression.

First of all, in the application area you can implement features that make I/O more efficient. The first 5 algorithms that we cover in this blog (Linear Regression, Logistic Regression, CART, Naïve Bayes, and K-Nearest Neighbors (KNN)) are examples of supervised learning.
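As one illustration of an application-level I/O optimization, the sketch below sends many small writes through a user-space buffer so that fewer write system calls reach the kernel. The function names `write_records` and `demo` and the 64 KiB buffer size are assumptions chosen for this example only.

```python
import os
import tempfile


def write_records(path, records, buffer_size=64 * 1024):
    """Write byte records through a user-space buffer of buffer_size bytes.

    In binary mode, passing an integer > 1 as `buffering` to open() sets the
    size of the buffer, so many small write() calls are coalesced before
    they are handed to the operating system.
    """
    with open(path, "wb", buffering=buffer_size) as f:
        for record in records:
            f.write(record)


def demo():
    """Write 1000 small records to a temporary file and verify the content."""
    records = [f"line {i}\n".encode() for i in range(1000)]
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        write_records(path, records)
        with open(path, "rb") as f:
            return f.read() == b"".join(records)
    finally:
        os.remove(path)
```

Whether a larger buffer actually helps depends on the workload and the storage device, so the size is worth measuring rather than assuming.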

The selection process will be carried out by the CPU scheduler. A lot of work including. Next, the buffer cache is one of the most important parts of I/O optimization, because this is the RAM that is reserved for reads as well.

It might take up to 80% of the time to clean data, making it a critical part of the analysis task. The Linux kernel has several different scheduling algorithms available, both for process scheduling and for I/O scheduling.
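On Linux, the I/O schedulers available for a block device are listed in the sysfs file `/sys/block/<device>/queue/scheduler`, with the active one shown in square brackets. The sketch below parses such a line; the helper names `parse_scheduler_line` and `read_io_scheduler` are made up for this example, and the device name passed in is whatever exists on the machine at hand.

```python
def parse_scheduler_line(line):
    """Split a sysfs scheduler line into (active, available).

    The kernel marks the active scheduler with square brackets,
    for example "noop deadline [cfq]".
    """
    active = None
    available = []
    for token in line.split():
        if token.startswith("[") and token.endswith("]"):
            token = token[1:-1]
            active = token
        available.append(token)
    return active, available


def read_io_scheduler(device):
    """Read and parse the scheduler line for a block device (Linux only)."""
    with open(f"/sys/block/{device}/queue/scheduler") as f:
        return parse_scheduler_line(f.read())
```

For example, `read_io_scheduler("sda")` would report the schedulers for a disk named `sda`, if such a device exists.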

So flowcharts are often used as a program planning tool to organize the program's step-by-step process visually. It identifies each process as either a real-time process or a normal (other) process. If you read the book in sequence up to this point, you have already used a number of optimization algorithms to train deep learning models.

SGD is the most important optimization algorithm in machine learning. The main task of CPU scheduling is to make sure that whenever the CPU becomes idle, the OS selects at least one of the processes available in the ready queue for execution.

The process of filtering is used by most recommender systems to identify patterns or information by collaborating viewpoints, various data sources, and multiple agents. Optimization and nonlinear methods. Network researchers have been working on new transport protocols and congestion control algorithms to support next-generation high-speed networks.

It assigns a longer time quantum to higher-priority tasks and a shorter time quantum to lower-priority tasks. It is extended in deep learning as Adam and Adagrad. Print 1 to 20.
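The idea of giving higher-priority tasks a longer time quantum can be sketched with a simple linear mapping. Everything here is hypothetical: the function name `time_quantum_ms`, the 40 priority levels, and the 10 ms to 200 ms range are illustrative assumptions, not the formula used by any Linux kernel version.

```python
def time_quantum_ms(priority, min_ms=10, max_ms=200, levels=40):
    """Map a priority to a time slice in milliseconds.

    Priority 0 is the highest and receives max_ms; priority levels - 1 is
    the lowest and receives min_ms. Values in between are interpolated
    linearly. All numbers are illustrative assumptions.
    """
    if not 0 <= priority < levels:
        raise ValueError("priority out of range")
    span = max_ms - min_ms
    return max_ms - span * priority / (levels - 1)
```

With these assumed defaults, a task at priority 5 gets a longer slice than one at priority 20.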

With the advent of computers, optimization has become a part of computer-aided design activities. A particular problem can typically be solved by more than one algorithm. O-notation can also be used to describe space complexity.

This is one of the most interesting algorithms, as it calls itself with smaller values as inputs, which it gets after solving for the current inputs. In simpler words, it is an algorithm that calls itself repeatedly until the problem is solved. The principle that lies behind the logic of genetic algorithms is an attempt to apply the theory of evolution to machine learning.
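A recursive algorithm of the kind described above can be shown with the factorial function, chosen here only as a familiar example: each call works on a smaller input until a base case stops the recursion.

```python
def factorial(n):
    """Return n! by calling itself with a smaller input each time."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n <= 1:
        # Base case: the problem is small enough to answer directly.
        return 1
    # Recursive case: solve the smaller problem, then combine.
    return n * factorial(n - 1)
```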

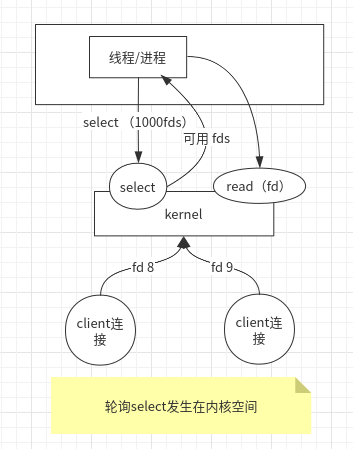

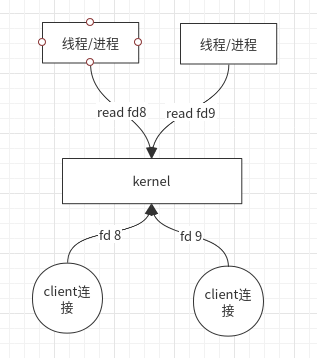

The congestion control algorithm used by TCP is poor at discovering available bandwidth and recovering from packet loss in high bandwidth-delay product networks [1]. Business, educational, personal, and algorithms. Flow of I/O requests.

Optimization is the process of finding the most efficient algorithm for a given task. They were the tools that allowed us to continue updating model parameters and to minimize the value of the. Genetic algorithms represent another approach to ML optimization.

Intelligent Systems to Support Human Decision Making. Stochastic gradient descent is an easy-to-understand algorithm for a beginner. 5.1 Mini-batch gradient descent.

Algorithm, ant algorithm, bee algorithm, bat algorithm, cuckoo search, firefly algorithm, harmony search, particle swarm optimization, metaheuristics. fmincon (constrained nonlinear minimization): trust-region-reflective (default). Allows only bounds or linear equality constraints, but not both. Optimization algorithms, which try to find the minimum values of mathematical functions, are everywhere in engineering.

With the rapid growth of big data and the availability of programming tools like Python and R, machine learning is gaining mainstream presence for data scientists. According to a recent study, machine learning algorithms are expected to replace 25% of the jobs across the world in the next 10 years. Mostly it is used in logistic regression and linear regression.

In evolutionary theory, only those specimens that have the best adaptation mechanisms get to survive and reproduce. You will get a full list of all available options with the built-in help. Ensembling is another type of supervised learning.
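The survive-and-reproduce idea can be sketched as a tiny genetic algorithm. The problem used here (maximizing the number of 1 bits in a bit string), the function names, and all parameter values are assumptions picked for illustration; real applications use problem-specific encodings and fitness functions.

```python
import random


def fitness(bits):
    """Fitness of an individual: the number of 1 bits it carries."""
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Run a minimal genetic algorithm; return (initial best fitness, best individual)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    initial_best = max(fitness(ind) for ind in population)
    for _ in range(generations):
        # Selection: only the fitter half survives, unchanged.
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]
        children = []
        while len(survivors) + len(children) < pop_size:
            # Reproduction: one-point crossover of two distinct survivors.
            mother, father = rng.sample(survivors, 2)
            cut = rng.randrange(1, n_bits)
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - b if rng.random() < mutation_rate else b
                     for b in child]
            children.append(child)
        population = survivors + children
    return initial_best, max(population, key=fitness)
```

Because the survivors are carried over unchanged, the best fitness in the population never decreases from one generation to the next.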

The algorithm used by the Linux scheduler is a complex scheme that combines preemptive priority and biased time slicing. Stochastic gradient descent is similar in a lot of ways to its precursor, batch gradient descent, but provides an edge over it in the sense that it makes rapid progress in reducing the risk objective with fewer passes over the dataset. 5.2 Mini-batch gradient descent with momentum.
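Mini-batch gradient descent with momentum can be sketched on a one-variable linear regression. This is a minimal pure-Python illustration, not code from any of the sources quoted here; the function name, learning rate, momentum coefficient, batch size, and the synthetic data in the usage note are all assumptions.

```python
import random


def minibatch_momentum(xs, ys, lr=0.05, beta=0.9, batch_size=10,
                       epochs=200, seed=0):
    """Fit y = w * x + b by mini-batch gradient descent with momentum."""
    rng = random.Random(seed)
    w = b = 0.0
    vw = vb = 0.0  # velocity terms that accumulate past gradients
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # a fresh random order of samples every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            gw = gb = 0.0
            for i in batch:
                err = (w * xs[i] + b) - ys[i]
                gw += 2 * err * xs[i]  # d/dw of the squared error
                gb += 2 * err          # d/db of the squared error
            gw /= len(batch)
            gb /= len(batch)
            # Momentum update: blend the previous step with the new gradient.
            vw = beta * vw - lr * gw
            vb = beta * vb - lr * gb
            w += vw
            b += vb
    return w, b
```

As a usage example, with `xs = [i / 100 for i in range(100)]` and `ys = [3 * x + 1 for x in xs]`, the returned `w` and `b` should end up close to 3 and 1.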

Iterative algorithms for minimizing a function f: ℜ^n → ℜ over a set X generate a sequence {x_k}, which will hopefully converge to an optimal solution. In this book we focus on iterative algorithms for the case where X. 5 - Model with different optimization algorithms.
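To make the sequence x_k concrete, the sketch below runs plain gradient descent, x_{k+1} = x_k - step * ∇f(x_k), and records every iterate. The objective f(x) = (x_0 - 3)^2 + (x_1 + 1)^2, the step size, and the function names are assumptions chosen for illustration; the set X is taken to be all of ℜ^2, so no constraint handling is shown.

```python
def gradient_descent(grad, x0, step=0.1, iterations=100):
    """Return the list of iterates x_0, x_1, ..., x_iterations."""
    xs = [list(x0)]
    for _ in range(iterations):
        x = xs[-1]
        g = grad(x)
        # Move against the gradient to decrease f.
        xs.append([xi - step * gi for xi, gi in zip(x, g)])
    return xs


def grad_f(x):
    """Gradient of the example objective f(x) = (x[0] - 3)^2 + (x[1] + 1)^2."""
    return [2 * (x[0] - 3), 2 * (x[1] + 1)]
```

Starting from `[0.0, 0.0]`, the iterates approach the minimizer `[3, -1]` of this particular objective.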

There are two distinct types of optimization algorithms widely used today. Machine learning applications are highly automated and self. Optimization is the process of improving the speed at which a program executes.

For example, many simple sort programs have a running time that is proportional to the square of the number of elements being sorted. Optimization Algorithms: Dive into Deep Learning 0.17.5 documentation. It starts with root-finding algorithms in one dimension using a simple example and then moves on to optimization methods (minimum finding) and multidimensional cases.
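The quadratic running time of simple sort programs can be seen by counting comparisons. Selection sort is used below as one representative simple sort (an assumption, since the text does not name a specific algorithm); for n elements it always performs n(n - 1)/2 comparisons.

```python
def selection_sort(items):
    """Sort a copy of items; return (sorted_list, number_of_comparisons)."""
    data = list(items)
    comparisons = 0
    n = len(data)
    for i in range(n - 1):
        smallest = i
        # Scan the unsorted tail for the smallest remaining element.
        for j in range(i + 1, n):
            comparisons += 1
            if data[j] < data[smallest]:
                smallest = j
        data[i], data[smallest] = data[smallest], data[i]
    return data, comparisons
```

Doubling the input size roughly quadruples the comparison count, which is the behavior described as O(N^2).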

Illustrate Io Models And Related Technologies For Linux
